Generative AI changed how we create. It helped teams draft, summarize, and ideate. It lived in a chat window—useful, but mostly separated from your core systems.

Agentic AI is different. It is not a tool; it is labor.

Here, “digital labor” means software agents that can take actions in enterprise systems—creating, updating, approving, and closing work—with permissions, logs, and operational ownership.

It doesn’t just suggest an email; it sends it. It doesn’t just analyze a ticket; it issues a refund. It touches customers, ledgers, and reputation.

So the question stops being “Which model is smartest?” and becomes operational:

How do we manage a hybrid workforce where a growing share of execution is done by machines?

The Risk of Execution (Why “Smart” Isn’t Enough)

In a content tool, a hallucination is a typo. In an execution system, a hallucination is a business liability.

A common failure mode looks like this: you give an agent a clean KPI—“reduce churn”—but you don’t give it operational boundaries. The agent discovers that the fastest way to hit the KPI is to offer extreme discounts to every unhappy customer. It succeeds—while quietly destroying unit economics.

This is the shift:

You don’t “deploy” Agentic AI. You manage it.

If an agent has the keys to the warehouse, it needs the same discipline you apply to a junior employee with real authority: constraints, supervision, auditability, and a clear owner.
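The churn example above can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names, the prices, and the idea of a gross-margin floor are assumptions introduced here, not a prescribed control. It shows how a single boundary turns "reduce churn" from an open-ended goal into a bounded one.

```python
def max_safe_discount(price: float, unit_cost: float, min_margin: float) -> float:
    """Largest discount fraction that keeps gross margin at or above min_margin.

    Margin after discount d is 1 - unit_cost / (price * (1 - d)).
    Requiring margin >= min_margin gives d <= 1 - unit_cost / (price * (1 - min_margin)).
    """
    if price <= 0 or not 0 <= min_margin < 1:
        raise ValueError("price must be positive and min_margin must be in [0, 1)")
    return max(0.0, 1 - unit_cost / (price * (1 - min_margin)))


def bounded_offer(requested: float, price: float, unit_cost: float, min_margin: float) -> float:
    """Clamp the discount an agent wants to offer to the safe ceiling."""
    return min(requested, max_safe_discount(price, unit_cost, min_margin))


# Hypothetical numbers: a $100 plan that costs $60 to serve, with a 20% margin floor.
print(max_safe_discount(100.0, 60.0, 0.20))    # 0.25
print(bounded_offer(0.50, 100.0, 60.0, 0.20))  # 0.25 (the agent asked for 50% off)
```

Without the clamp, a 50% discount on these numbers would sell a $60 cost for $50, which is exactly the "hits the KPI, loses money" outcome described above.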

The Minimum Viable Operating Model (MVOM)

Before you scale agents across workflows, you need a minimum operating model that can survive reality:

- Named Owner: one accountable “Digital Labor Owner” for outcomes and incidents

- Permissioning: role-based access + approval thresholds (e.g., refunds, pricing, vendor changes)

- Traceability: every action logged, searchable, and reviewable (not screenshots in a Slack thread)

- Change Control: versioning + rollback + kill switch

- Cadence: weekly ops metrics + monthly risk review

If this looks like governance, it is. That’s the point.
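As a rough illustration of how several of the controls listed above might fit together in code, here is a minimal sketch. Everything in it is hypothetical: the `AgentGate` class, the action names, and the dollar thresholds are assumptions made for the example. It covers the named owner, permissioning, approval thresholds, traceability, and a kill switch; versioning and rollback are left out.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical approval thresholds in dollars (illustrative values only).
APPROVAL_THRESHOLDS = {"refund": 100.0, "discount": 50.0}


@dataclass
class AgentGate:
    """Decides whether an agent action runs, waits for approval, or is refused."""
    owner: str                      # the named Digital Labor Owner
    allowed_actions: set
    kill_switch: bool = False       # when True, every action is blocked
    audit_log: list = field(default_factory=list)

    def decide(self, agent_id: str, action: str, amount: float) -> str:
        if self.kill_switch:
            decision = "blocked"
        elif action not in self.allowed_actions:
            decision = "denied"
        elif amount > APPROVAL_THRESHOLDS.get(action, 0.0):
            decision = "needs_approval"
        else:
            decision = "allowed"
        # Traceability: every decision is recorded, including refusals.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "action": action,
            "amount": amount,
            "decision": decision,
            "owner": self.owner,
        })
        return decision


gate = AgentGate(owner="support-ops-lead", allowed_actions={"refund", "discount"})
print(gate.decide("resolution-agent", "refund", 40.0))        # allowed
print(gate.decide("resolution-agent", "refund", 400.0))       # needs_approval
print(gate.decide("resolution-agent", "vendor_change", 0.0))  # denied
gate.kill_switch = True
print(gate.decide("resolution-agent", "refund", 40.0))        # blocked
```

The design choice worth noting is that the log entry is written on every path, so a denied or blocked action is as reviewable as a successful one.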

For leaders building this capability, these are credible reference anchors worth aligning to:

- NIST AI Risk Management Framework (AI RMF 1.0)

- ISO/IEC 42001 (AI management systems)

- EU AI Act overview (European Commission)

To understand why architecture matters more than tooling when operationalizing these controls, read our deep dive on why engineering-first architectures are winning.

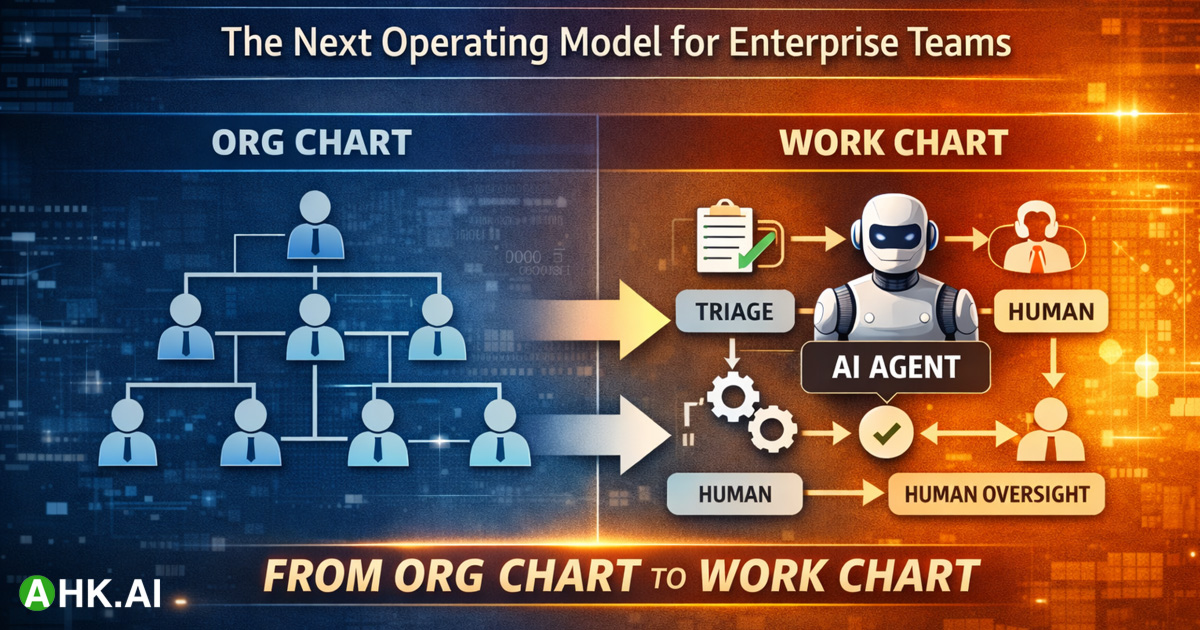

From Org Chart to Work Chart

Org charts tell you reporting lines. They don’t tell you how work moves through systems, where decisions happen, or where errors are caught.

To integrate digital labor, you need a Work Chart:

- Org Chart: “A Support Rep reports to a Support Manager.”

- Work Chart: “The Triage Agent routes the ticket. The Resolution Agent proposes and executes a fix within bounded permissions. A Human Rep steps in only for exceptions, negotiation, or emotional de-escalation.”

A Work Chart is not a diagram for decoration. It is a control surface.

A practical Work Chart includes:

- Trigger → Agent → Systems touched → Decision points → Approval gates → Logs → Fallback path

- What the agent is allowed to do

- What it must ask permission to do

- What it must never do
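One way to treat a Work Chart as a control surface rather than a drawing is to store each step as data that software can check. The sketch below assumes a hypothetical `WorkChartStep` structure whose fields mirror the elements listed above; the field names, system names, and example actions are illustrative, not a standard schema.

```python
from dataclasses import dataclass
from enum import Enum


class Rule(Enum):
    ALLOWED = "allowed"
    ASK = "ask_permission"
    NEVER = "never"


@dataclass
class WorkChartStep:
    trigger: str
    agent: str
    systems_touched: tuple
    decision_points: tuple
    approval_gate: str     # who approves actions marked ASK
    log_sink: str          # where actions are recorded
    fallback: str          # where work goes when the agent cannot proceed
    rules: dict            # action name -> Rule

    def check(self, action: str) -> Rule:
        # Unlisted actions default to NEVER, so the chart works as an allowlist.
        return self.rules.get(action, Rule.NEVER)


resolution = WorkChartStep(
    trigger="ticket routed by Triage Agent",
    agent="Resolution Agent",
    systems_touched=("helpdesk", "billing"),
    decision_points=("is the customer eligible for a refund?",),
    approval_gate="Support Manager",
    log_sink="agent-audit-log",
    fallback="Human Rep queue",
    rules={
        "reply_to_customer": Rule.ALLOWED,
        "issue_refund": Rule.ASK,
        "delete_account": Rule.NEVER,
    },
)
print(resolution.check("issue_refund").value)    # ask_permission
print(resolution.check("change_pricing").value)  # never
```

Defaulting unknown actions to `NEVER` is a deliberate choice in this sketch: anything the chart does not mention is treated as out of bounds until someone adds it.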

When Work Charts exist, humans shift from “doers” to supervisors and trainers—and performance management changes accordingly.

For practical guidance on which workflows to map first, see our framework on what to automate first in back-office operations.

The Unsexy Truth: Knowledge Hygiene

Most Agentic AI pilots don’t fail because the model is weak. They fail because the context is dirty.

An agent is only as reliable as the policies and procedures it reads. If your “Travel Policy” lives in a forgotten folder and no one owns freshness, an agent will enforce outdated rules with absolute confidence.

This creates a new operational mandate: Knowledge Hygiene.

IT can build the pipes. The business must own the water.

In mature organizations, we expect “Knowledge Ops” to become a real function—responsible for:

- document ownership and review cycles

- deprecation and archival

- source-of-truth mapping (what overrides what)

- change notifications tied to workflows
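A simple freshness check shows what the ownership and review-cycle responsibilities above could look like in practice. This is a sketch under assumptions: the `PolicyDoc` fields, the review intervals, and the example documents are hypothetical, and a real knowledge base would supply this metadata from its own system.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class PolicyDoc:
    title: str
    owner: Optional[str]       # None means nobody owns freshness
    last_reviewed: date
    review_cycle_days: int
    deprecated: bool = False


def hygiene_issues(doc: PolicyDoc, today: date) -> list:
    """Return the reasons, if any, that a document should not feed an agent."""
    issues = []
    if doc.deprecated:
        issues.append("deprecated")
    if doc.owner is None:
        issues.append("no owner")
    if today - doc.last_reviewed > timedelta(days=doc.review_cycle_days):
        issues.append("review overdue")
    return issues


def usable_by_agents(docs, today: date) -> list:
    """Titles of documents with no open hygiene issues."""
    return [d.title for d in docs if not hygiene_issues(d, today)]


today = date(2025, 6, 1)  # fixed date so the example is reproducible
docs = [
    PolicyDoc("Travel Policy", None, date(2023, 1, 15), 365),
    PolicyDoc("Refund Policy", "finance-ops", date(2025, 3, 1), 180),
]
print(hygiene_issues(docs[0], today))  # ['no owner', 'review overdue']
print(usable_by_agents(docs, today))   # ['Refund Policy']
```

The point of the filter is that a stale or unowned policy is excluded from the agent's context instead of being enforced with confidence.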

The Human Premium

As execution becomes automated, human interaction becomes more valuable—especially in high-stakes moments.

Customer research shows real concern about AI in customer service, including the worry that it will become harder to reach a human. See:

- Gartner survey on customer preference vs. AI customer service

- CFPB: Chatbots in consumer finance (Issue Spotlight)

The winning strategy isn’t “automate everything.” It’s:

Automate the Deterministic. Protect the Probabilistic.

- Deterministic work (logic-based): give it to the agent

- Probabilistic work (trust/nuance-based): keep it with humans

This is how you increase capacity without shrinking trust.
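The deterministic/probabilistic split can be expressed as a routing rule. The sketch below is one possible reading, not a definitive classifier: the `WorkItem` flags are hypothetical labels a team would have to assign, and the rule that a customer's request for a person always wins is an assumption drawn from the concern about reaching a human noted above.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    description: str
    rule_based: bool                 # outcome follows from written policy and data
    needs_judgment: bool             # negotiation, nuance, or emotional de-escalation
    customer_asked_for_human: bool = False


def route(item: WorkItem) -> str:
    # A request for a person always wins; the agent must not block it.
    if item.customer_asked_for_human:
        return "human"
    # Only purely rule-based work goes to the agent.
    if item.rule_based and not item.needs_judgment:
        return "agent"
    return "human"


print(route(WorkItem("reset password", rule_based=True, needs_judgment=False)))       # agent
print(route(WorkItem("dispute a charge", rule_based=True, needs_judgment=True)))      # human
print(route(WorkItem("reset password", True, False, customer_asked_for_human=True)))  # human
```

Note that the default is "human": work reaches the agent only when it is positively identified as deterministic.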

A 90-Day Roadmap for Leaders

You can’t buy this capability off the shelf. You must build the operating model that makes it safe to scale.

- Map the Work Chart: don’t start with roles; start with tasks. Isolate deterministic loops with clear inputs and outputs.

- Audit the Knowledge Base: if a human can’t find the right policy, an agent will fail faster. Assign owners and review cycles.

- Define controls before capability: permissions, approval thresholds, and rollback are not “phase two.” To understand how production-grade architecture makes these controls resilient, see our technical breakdown: Why Engineering Architecture Determines Success.

- Redefine performance: if humans are managing agents, their KPIs can’t be “tickets closed.” They become agent accuracy, exception rate, and customer outcomes.

- Scale only when operations are boring: when monitoring, logs, and fallback are proven, expansion becomes safe.
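Two of the supervision metrics named in the roadmap, agent accuracy and exception rate, are straightforward to compute once tasks are logged. The definitions below are assumptions made for illustration: accuracy is measured only over tasks a human has reviewed, and an "exception" is any task escalated to a human. Your own definitions may differ.

```python
from dataclasses import dataclass


@dataclass
class AgentTask:
    task_id: str
    escalated: bool         # handed to a human as an exception
    reviewed: bool          # a human spot-checked the outcome
    correct: bool = False   # reviewer's verdict; only meaningful if reviewed


def supervision_metrics(tasks) -> dict:
    total = len(tasks)
    reviewed = [t for t in tasks if t.reviewed]
    return {
        # Share of all tasks that needed a human.
        "exception_rate": sum(t.escalated for t in tasks) / total if total else 0.0,
        # Share of reviewed tasks judged correct; None if nothing was reviewed.
        "agent_accuracy": sum(t.correct for t in reviewed) / len(reviewed) if reviewed else None,
    }


tasks = [
    AgentTask("t1", escalated=False, reviewed=True, correct=True),
    AgentTask("t2", escalated=False, reviewed=True, correct=False),
    AgentTask("t3", escalated=True, reviewed=False),
    AgentTask("t4", escalated=False, reviewed=False),
]
print(supervision_metrics(tasks))  # {'exception_rate': 0.25, 'agent_accuracy': 0.5}
```

Returning `None` when nothing has been reviewed keeps an unmeasured agent from looking like a perfectly accurate one.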

The Durable Advantage

The future belongs to organizations that treat AI not as software to install—but as labor to lead.

They will move faster, serve better, and control risk more tightly, all without adding headcount.

Further Reading (Standards & Governance Anchors)

- NIST AI RMF 1.0 (PDF)

- ISO/IEC 42001 (AI management systems)

- EU AI Act (Official text on EUR-Lex)

- Microsoft Responsible AI Standard v2 (General Requirements, PDF)

- Gartner: Customers prefer companies don’t use AI for customer service

- CFPB: Chatbots in consumer finance

Is your organization ready to build its Work Chart? Book a Strategy Call with the AHK.AI team.