Enterprise AI Automation ≠ Tools: Why Engineering Architecture Determines Success

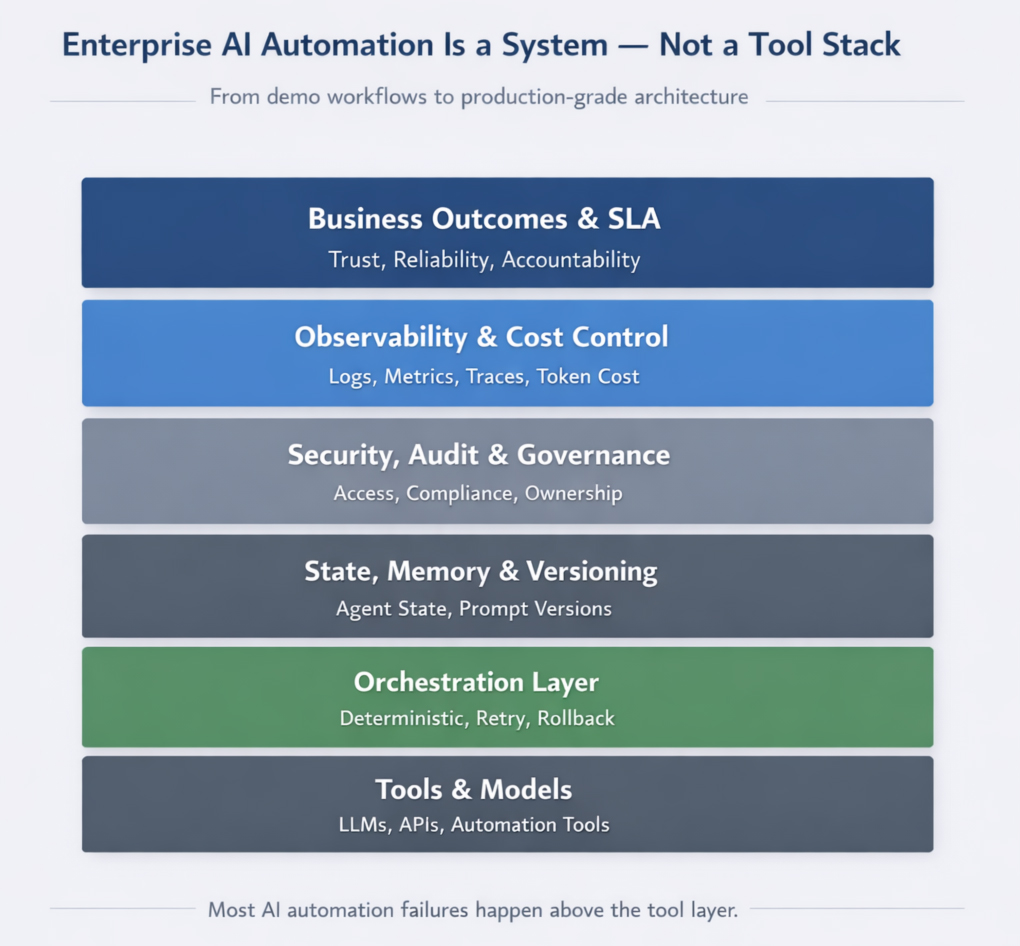

Most enterprise AI automation projects don’t fail because of model quality or tool choice. They fail for a simpler reason: there’s no production-grade engineering underneath.

The Core Misconception: Automation Is Not a Tool Stack

In recent years, “AI automation” has become synonymous with tools. Zapier, n8n, Copilot, agent frameworks: they all promise speed, flexibility, and low-code productivity. And for demos and proofs of concept, they work.

But enterprises don’t fail at automation because tools are weak. They fail because tools are mistaken for systems.

Tools execute steps. Architecture governs behavior under scale, failure, and change.

This distinction becomes critical once automation moves from experimentation to production. Martin Fowler’s writing on software architecture has covered this gap for decades, yet it’s routinely ignored in AI automation projects.

Why Enterprise AI Automation Fails After the POC Phase

Across large organizations, failed AI automation initiatives share the same pattern:

The POC succeeds quickly. Initial productivity gains appear. Then real-world complexity shows up and reliability starts to break.

This isn’t a tooling problem; it’s an architecture vacuum.

Common failure points we see repeatedly:

- Stateless workflows with no recovery guarantees. When something breaks, there’s no way to resume. You start over or give up.

- No versioning or rollback strategy. A prompt change goes live, breaks production logic, and there’s no easy way back.

- Missing audit trails for AI decisions. Six months later, legal asks “why did the system do X?” and nobody knows.

- No ownership of infrastructure or data flow. The vendor controls it all. You’re just a tenant.

- Costs scaling faster than business value. Token usage explodes with no visibility or control.
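The first failure point, a workflow with no recovery guarantees, is usually fixed by persisting progress after every step. Below is a minimal sketch, assuming a workflow is an ordered list of named steps over a JSON-serializable state dict, and using a local file as a stand-in for a durable store such as a database. `run_with_checkpoints`, the step names, and the file path are hypothetical. A production system would more likely rely on a durable-execution engine than on hand-rolled checkpoints.

```python
import json
import os
import tempfile
from typing import Callable, List, Tuple

# A step is a (name, function) pair; each function maps the current state to the next state.
Step = Tuple[str, Callable[[dict], dict]]


def run_with_checkpoints(steps: List[Step], state: dict, checkpoint_path: str) -> dict:
    """Run steps in order, saving progress after each one so a rerun resumes instead of starting over."""
    done: List[str] = []
    if os.path.exists(checkpoint_path):
        # Resume: restore the saved state and the names of steps that already finished.
        with open(checkpoint_path) as f:
            saved = json.load(f)
        state, done = saved["state"], saved["done"]

    for name, fn in steps:
        if name in done:
            continue  # finished in an earlier run; don't repeat it
        state = fn(state)
        done.append(name)
        # Write a temp file, then atomically swap it in, so a crash never leaves a half-written checkpoint.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump({"state": state, "done": done}, f)
        os.replace(tmp_path, checkpoint_path)
    return state


if __name__ == "__main__":
    attempts = {"classify": 0}

    def extract(state: dict) -> dict:
        return {**state, "text": "invoice #42"}

    def classify(state: dict) -> dict:
        attempts["classify"] += 1
        if attempts["classify"] == 1:
            raise TimeoutError("simulated model timeout")  # the first run dies here
        return {**state, "label": "invoice"}

    path = os.path.join(tempfile.mkdtemp(), "run-001.json")
    steps = [("extract", extract), ("classify", classify)]
    try:
        run_with_checkpoints(steps, {"doc_id": 42}, path)
    except TimeoutError:
        pass
    # The rerun skips "extract" and picks up at "classify".
    print(run_with_checkpoints(steps, {"doc_id": 42}, path))
```

Skipping completed steps is only safe if each step's side effects are saved together with its checkpoint. A step that crashes after acting but before the checkpoint is written will run again on resume.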

The Google SRE team made this point years ago: reliability is a property of the whole system, not the result of individual components. The same holds for AI automation.

Tools Scale Linearly. Enterprises Do Not.

Most automation tools assume single-tenant logic, happy-path execution, and minimal governance requirements.

Enterprises operate under the opposite assumptions: multi-team ownership, regulatory oversight, partial failures as the norm, and long-lived systems with evolving requirements.

This mismatch explains why many “successful” automations collapse at scale. The AWS Well-Architected Framework makes the same point: design for failure, not just for success.
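Designing for partial failure rather than the happy path often starts with a circuit breaker. After repeated failures, the breaker stops calling a struggling dependency, such as a model endpoint, for a cool-down period and either fails fast or serves a fallback. Below is a minimal, single-threaded sketch. The thresholds, the injectable clock, and the class names are illustrative assumptions.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and no fallback was supplied."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock  # injectable so tests can control time
        self.failures = 0   # consecutive failures
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Optional[Callable[[], T]] = None) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                # Open: fail fast instead of piling more load onto an unhealthy dependency.
                if fallback is not None:
                    return fallback()
                raise CircuitOpenError("dependency marked unhealthy; call skipped")
            # Cool-down elapsed (half-open): let one trial call through.
            self.opened_at = None
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

The fallback might return a cached answer or queue the request for later. The point is that one unhealthy dependency degrades a single feature instead of stalling every workflow that touches it.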

What Engineering Architecture Actually Means in AI Automation

Enterprise AI automation isn’t a workflow; it’s a distributed system with AI components. A production-grade architecture typically includes:

Orchestration, Not Chaining

Deterministic state transitions, idempotent execution, and retry/compensation logic. Compare this with simple trigger-based chains that break when anything unexpected happens. Temporal’s approach to durable workflows demonstrates what real orchestration looks like.
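The retry and compensation part of that description fits in a few lines. This is a minimal, in-memory illustration of the saga pattern, not Temporal's API. It uses retries with exponential backoff, an idempotency cache keyed by step name, and compensation in reverse order. `SagaStep`, `Orchestrator`, and the step names in the test are hypothetical. A real durable engine would persist `completed` so that a replay survives a process crash.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class SagaStep:
    name: str                       # stable key, used for idempotency
    action: Callable[[], Any]       # the forward operation (e.g. call a model, write a record)
    compensate: Callable[[], None]  # undoes the action if a later step fails


@dataclass
class Orchestrator:
    max_attempts: int = 3
    base_delay_s: float = 0.2
    # Results of steps that already succeeded, keyed by step name (in-memory here; persist it in practice).
    completed: Dict[str, Any] = field(default_factory=dict)

    def _execute(self, step: SagaStep) -> Any:
        if step.name in self.completed:
            return self.completed[step.name]  # idempotent: a replay does not re-run finished work
        last_error: Exception = RuntimeError("max_attempts must be >= 1")
        for attempt in range(self.max_attempts):
            try:
                result = step.action()
                self.completed[step.name] = result
                return result
            except Exception as exc:  # simplification: retries every error, not just transient ones
                last_error = exc
                if attempt < self.max_attempts - 1:
                    time.sleep(self.base_delay_s * 2 ** attempt)  # exponential backoff
        raise last_error

    def run(self, steps: List[SagaStep]) -> bool:
        done: List[SagaStep] = []
        for step in steps:
            try:
                self._execute(step)
                done.append(step)
            except Exception:
                # Compensate finished steps in reverse order to return to a consistent state.
                for finished in reversed(done):
                    finished.compensate()
                    self.completed.pop(finished.name, None)
                return False
        return True
```

Retrying every exception, as this sketch does, is a simplification. In practice you would retry only errors known to be transient, such as timeouts and rate limits, and fail fast on the rest.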

State, Memory, and Version Control

Persistent agent memory, versioned prompts and models, reproducible execution states. Without this, debugging becomes impossible. You can’t replay a failure if you don’t know what state the system was in. LlamaIndex’s documentation on stateful agent systems covers this well.
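Versioned prompts and reproducible execution state come down to two habits: never edit a prompt in place, and record exactly which version ran. The sketch below assumes an in-memory registry; a real one would live in a database or in source control. `PromptRegistry`, `execution_record`, and the `model_id` value are hypothetical names, not any specific library's API.

```python
import hashlib
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    digest: str  # content hash: pins the exact text that produced an output


class PromptRegistry:
    """Every publish creates a new immutable version; rollback only moves the active pointer."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}
        self._active: Dict[str, int] = {}

    def publish(self, name: str, template: str) -> PromptVersion:
        history = self._history.setdefault(name, [])
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        pv = PromptVersion(name, len(history) + 1, template, digest)
        history.append(pv)
        self._active[name] = pv.version
        return pv

    def get(self, name: str, version: int) -> PromptVersion:
        history = self._history.get(name, [])
        if not 1 <= version <= len(history):
            raise KeyError(f"prompt {name!r} has no version {version}")
        return history[version - 1]

    def active(self, name: str) -> PromptVersion:
        return self.get(name, self._active[name])

    def rollback(self, name: str, version: int) -> PromptVersion:
        pv = self.get(name, version)  # validates that the version exists
        self._active[name] = version
        return pv


def execution_record(pv: PromptVersion, model_id: str, inputs: Dict[str, Any]) -> Dict[str, Any]:
    """What to store with every AI call so the run can be reproduced later."""
    return {
        "prompt": pv.name,
        "prompt_version": pv.version,
        "prompt_digest": pv.digest,
        "model_id": model_id,
        "inputs": inputs,
    }
```

Because `rollback` only moves the active pointer, reverting a bad prompt change is immediate. Every stored `execution_record` still names the exact text, by version and digest, that produced its output.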

Security, Auditability, and Ownership

Enterprises need answers to questions like: Who accessed which data? Which model version generated this output? Can we explain this decision six months later?

These aren’t “nice-to-haves.” They’re governance requirements that regulators and auditors will ask about. The NIST AI Risk Management Framework provides structure here.
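An audit trail only helps if it can answer those questions and can't be quietly edited afterward. One common technique is a hash-chained, append-only log in which each entry stores the hash of the entry before it. The sketch below is a minimal illustration, not something the NIST framework prescribes, and the field names and example values are assumptions.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

GENESIS = "0" * 64


def _digest(body: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log: editing any past entry breaks the chain from that point on."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def record(self, actor: str, data_refs: List[str], model_version: str, decision: str) -> Dict[str, Any]:
        body = {
            "ts": time.time(),
            "actor": actor,                  # who triggered the action
            "data_refs": data_refs,          # which data it read
            "model_version": model_version,  # which model produced the output
            "decision": decision,            # what the system decided
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

A hash chain makes tampering detectable, not impossible. Someone who can rewrite the whole log can also recompute every hash, so in practice the latest hash is also anchored somewhere the writer cannot modify, such as write-once storage.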

Observability and Cost Control

AI automation without observability becomes unmanageable at scale. Metrics must include latency, error rates, token usage, and cost per business outcome.

OpenTelemetry has become the de facto industry standard for observability, and it applies to AI systems just as much as to traditional distributed systems.
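Cost per business outcome is the metric teams most often skip, because it requires joining token spend with something the business actually counts. The sketch below shows only that arithmetic, in plain Python so it runs without any SDK. In production these values would typically be exported as OpenTelemetry metrics or span attributes. `WorkflowMeter`, `CallMetrics`, and the per-1,000-token prices are hypothetical placeholders.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CallMetrics:
    latency_ms: float
    input_tokens: int
    output_tokens: int
    error: bool


@dataclass
class WorkflowMeter:
    # Prices per 1,000 tokens are placeholders; use your provider's actual rates.
    price_in_per_1k: float
    price_out_per_1k: float
    calls: List[CallMetrics] = field(default_factory=list)
    outcomes: int = 0  # business outcomes delivered, e.g. tickets resolved or invoices processed

    def record_call(self, m: CallMetrics) -> None:
        self.calls.append(m)

    def record_outcome(self, n: int = 1) -> None:
        self.outcomes += n

    def summary(self) -> Dict[str, float]:
        n = len(self.calls)
        # Failed calls still consume tokens, so they count toward cost.
        cost = sum(
            c.input_tokens / 1000 * self.price_in_per_1k + c.output_tokens / 1000 * self.price_out_per_1k
            for c in self.calls
        )
        latencies = sorted(c.latency_ms for c in self.calls)
        # Nearest-rank p95: the smallest latency that at least 95% of calls do not exceed.
        p95 = latencies[math.ceil(0.95 * n) - 1] if n else 0.0
        return {
            "calls": n,
            "error_rate": sum(c.error for c in self.calls) / n if n else 0.0,
            "p95_latency_ms": p95,
            "tokens": sum(c.input_tokens + c.output_tokens for c in self.calls),
            "cost": cost,
            "cost_per_outcome": cost / self.outcomes if self.outcomes else math.inf,
        }
```

Counting failed calls toward cost is deliberate. Retries and errors burn tokens without producing outcomes, which is exactly how costs end up scaling faster than business value.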

Why Engineering-First Teams Outperform Tool-First Agencies

The market is diverging here.

Tool-first agencies optimize for speed of deployment, visual demos, and low upfront effort.

Engineering-first teams optimize for system durability, internal ownership, long-term cost control, and compliance and audit readiness.

This isn’t a difference in preference; it’s a difference in operating model. As McKinsey’s research on AI at scale shows, sustainable AI value comes from system integration, not isolated tooling.

Enterprise AI Automation Is Becoming Infrastructure

The most important shift underway is this: AI automation is no longer a productivity layer. It’s becoming enterprise infrastructure.

Infrastructure demands engineering discipline, ownership clarity, and architectural foresight.

The organizations that succeed in AI automation won’t be those who adopted the most tools. They’ll be those who designed the most resilient systems.

Final Thought

Tools will continue to improve. Models will continue to evolve. But architecture is what determines whether AI automation survives contact with reality.

Enterprise AI automation isn’t about what you connect; it’s about what you can trust when things go wrong.

Building production AI systems? Talk to our engineering team about architecture-first automation.